Information, opinions, misinformation, and misinformed, irrational opinions flood us every day, and the flood seems to be accelerating. When I wrote about the lack of a comprehensive human rebuttal to Noam Chomsky’s take on AI, I accidentally seeded this post, because that piece touched on something we just don’t seem to have much opportunity to do anymore.

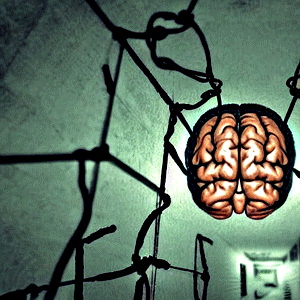

Reflect. Consider. Think things through.

I was scrolling through Facebook reels, as I unfortunately do, and reading the comments on them. There’s a lot of value signaling going on around videos that don’t give the greater context of the situation. It’s amazing, really, how that value signaling leads people to construct strong opinions without any rationale. In turn, people want views, because if you don’t have views, what’s the point of doing it?

For me, the point of doing it would be to share good knowledge. We didn’t get where we are today as a society by not sharing good knowledge, but the signal-to-noise ratio has become… well, a noise-to-signal ratio. Add to that the up-and-coming role of artificial intelligence, the upcoming US Presidential election, Russia’s continued aggression against Ukraine, and the tragic affair of Hamas and the state of Israel with a lot of humans caught in between… things aren’t going to get better soon.

It’s time to be unpopular. To think things through. To find the meat hidden in all this tasty, cholesterol-ridden fat presented to us because people want views, likes, shares, etc. It’s a good analogy, actually, because some people have more trouble with cholesterol than others. If I walked past a steak, my cholesterol would increase, so I won’t tell you what I had for dinner last night.

Some people are more gullible than others. Some people are more irrational than others. We know this. We see this every day. I often make jokes about it, as I told a store cashier a few days ago, because it’s my way of coping with the gross stupidity we see.

So how does one become unpopular? It’s pretty simple.

Worry about being wrong. Decide not to do something because you’re not sure of the impact it will have. Humanity tends to gravitate to strong opinions, however wrong they are. Marketing tends to maximize that, and marketing has become a part of our lives. I see lots of videos and ‘hot takes’ on Dave Chappelle and Ricky Gervais, but they tend to take the comedians out of context and beat them with an imposed one; and somehow, despite all of that, these comedians remain popular and no one stops to consider why. Why are they still held in high regard? Because they dared to be unpopular. They dare, and I dare say this, to be authentic, thoughtful, and funny, no matter how many people think they are unpopular.

All too often, people are too busy value signaling to think about whatever the subject is. They need to have an opinion before they watch that next video, read that next tweet (it’s Twitter, Elon; it always will be)…

Slow down. That’s how to be unpopular. Think things through before communicating about them. The world will not end if you don’t have an opinion right now.

Once upon a time, there was value in that.

I’ll let you in on a secret: There still is.

Here’s what to do: Watch something or read something. Find out more about it. Think about it in different contexts. Then maybe do something or say something if you think there is value in it, or maybe just don’t say anything until you do.