We understand things better when we can interact with them and see an effect. A light switch is a perfectly good example.

If the light is off, we can assume that the light switch is in the off position. A lack of electricity makes this flawed, so we look around to see whether other things that require electricity are on.

If the light is on, we can assume the light switch is in the on position.

Simple. Even if we can’t see, we have a 50% chance of getting this right.

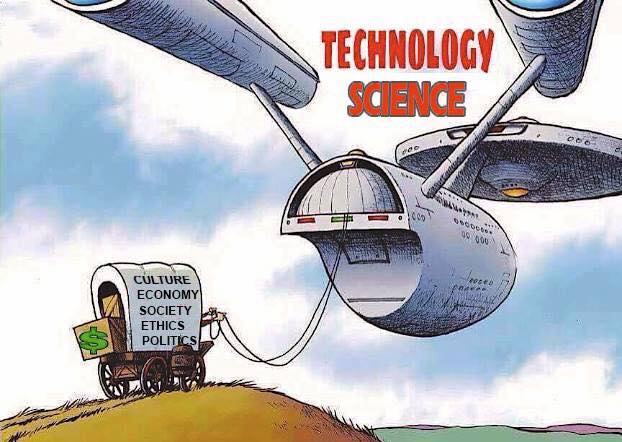

It gets more complicated when we don’t have an immediate effect on something, or can’t have an effect at all. As I wrote about before, we have a lot of stuff that is used every day where the users don’t understand how it works. This is sometimes a problem. Are nuclear reactors safe? Will planting more trees in your yard impact air quality in a significant way?

This is where we end up trusting things. And sometimes, these things require skepticism. The world being flat deserves as much skepticism as it being round, but there’s evidence all around that the world is indeed round. There is little evidence that the world is flat. Why do people still believe the earth is flat?

Shared Reality Evolves.

As children, we learn by experimenting with the things around us. As we grow older, we lean on information and trusted sources more – like teachers and books – to tell us things that are true. My generation was the last before the Internet, and so whatever information we got was peer reviewed, passed the muster of publishers, etc. There were many hoops that had to be jumped through before something went out into the wild.

Yet if we read the same books and magazines and saw the same television shows, we had a shared reality that we had, to an extent, agreed upon, and that, to another extent, was forced on us.

The news was about reporting facts. Everyone who had access to the news had access to the same facts, and they could come to their own conclusions, though to say that there wasn’t bias then would be dishonest. It just happened slower, and because it happened slower, more skepticism would come into play so that faking stuff was harder to do.

Enter The Internet

It followed that the early adopters (I was one) were akin to the first car owners because we understood the basics of how things worked. If we wanted a faster connection, we figured out what was slowing our connections and we did it largely without search engines – and then search engines made it easier. Websites with good information were valued, websites with bad information were ignored.

Traditional media increasingly found that the Internet business model was based on advertising, and that it didn’t translate well to the traditional methods of advertising. To stay competitive, some news became opinion and began to spin toward getting people to click on websites for the advertisers. The Internet was full of free information, and they had to compete.

Over a few decades, the Internet became more pervasive, and the move toward mobile phones – which are not used mainly as phones anymore – brought information to us immediately. The advertisers and marketers found that next to certain content, people were more likely to be interested in certain advertising so they started tracking that. They started tracking us and they stored all this information.

Enter Social Media

Soon enough, social media came into being and suddenly you could target and even microtarget based on what people wanted. When people give up their information freely online, and you can take that information and connect it to other things, you can target people based on clusters of things that they pay attention to.

Sure, you could just choose a political spectrum – but you could add religious beliefs, gender/identity, geography, etc., and tweak what people see based on a group they created from actual interactions on the Internet. Sound like science fiction? It’s not.

Instead of a shared reality on one axis, you could target people on multiple axes.

Cambridge Analytica

Enter the Facebook-Cambridge Analytica Data Scandal:

Cambridge Analytica came up with ideas for how to best sway users’ opinions, testing them out by targeting different groups of people on Facebook. It also analyzed Facebook profiles for patterns to build an algorithm to predict how to best target users.

“Cambridge Analytica needed to infect only a narrow sliver of the population, and then it could watch the narrative spread,” Wylie wrote.

Based on this data, Cambridge Analytica chose to target users that were “more prone to impulsive anger or conspiratorial thinking than average citizens.” It used various methods, such as Facebook group posts, ads, sharing articles to provoke or even creating fake Facebook pages like “I Love My Country” to provoke these users.

“The Cambridge Analytica whistleblower explains how the firm used Facebook data to sway elections”, Rosalie Chan, Business Insider (Archived), October 6th, 2019

This drew my attention because it impacted the two countries I am linked to: the United States and Trinidad and Tobago. It is known to have impacted the Ted Cruz Campaign (2016) and the Donald Trump Presidential Campaign (2016), and to have interfered in the Trinidad and Tobago Elections (2010).

Looking at the timeline of all of that, things were figured out years after the damage had already been done.

The Shared Realities By Algorithm

When you can splinter groups and feed them slightly different or even completely different information, you can impact outcomes, such as elections. In the U.S., you can see it with television channel news biases – Fox News was the first to be noted. When the average attention span of people is now 47 seconds, things like Twitter and Facebook (Technosocial dominant) can make this granularity finer and finer.

Don’t you know at least one person who believes some pretty whacky stuff? Follow them on social media, and I guarantee you’ll see where it’s coming from. And it gets worse now, because AI has become more persuasive than the majority of people and critical thinking has not kept pace.

When you like or share something on social media, ask yourself whether someone has a laser pointer and is just adding a red dot to your life.

The Age of Generative AI And Splintered Shared Realities

Recently, people have been worrying about AI in elections and primarily focusing on deepfakes. Yet deepfakes are very niche and haven’t been that successful. This is probably also because it has been the focus, and therefore people are skeptical.

The generative AI we see, large language models (LLMs), was trained largely on Internet content, and what is Internet content largely? You can’t seem to view a web page without it. Advertising. Selling people stuff that they don’t want or need. Persuasively.

And what do sociotechnical dominant social media entities do? Why, they train their AIs on the data available, of course. Wouldn’t you? Of course you would. To imagine that they would never use your information to train an AI requires more imagination than the Muppets on Sesame Street could muster.

Remember when I wrote that AI is more persuasive? Imagine prompting an AI on what sort of messaging would be good for a specific microtarget. Imagine asking it how to persuade people to believe it.

And imagine, in a society of averages, that the majority of people will be persuaded by it. What is democracy? People forget that it’s about informed conversations and they go straight to the voting because they think that is the measure of a democracy. It’s a measure, and the health of that measure reflects the health of the discussion preceding the vote.

AI can be used – and I’d argue has been used – successfully in this way, much akin to the story of David and Goliath, where David used technology as a magnifier. A slingshot effect. Accurate when done right, multiplying the force and decreasing the striking surface area.

How To Move Beyond It?

Well, first, you have to understand it. You also have to be skeptical about why you’re seeing the content that you do, especially when you agree with it. You also have to understand that, much like drunk driving, you don’t have to be drinking to be a victim.

Next, you have to understand the context other people live in – their shared reality and their reality.

Probably more important is not calling people names because they disagree with you. Calling someone racist or stupid is a recipe for them to stop listening to you.

Where people – including you – can be manipulated by what is shown in their feeds to divide them, find the common ground. The things that connect. Don’t let entities divide us. We do that well enough by ourselves without suiting their purposes.

The future should be about what we agree on, our common shared identities, where we can appreciate the nuances of difference. And we can build.